Embedded Machine Learning: Software Optimization

About

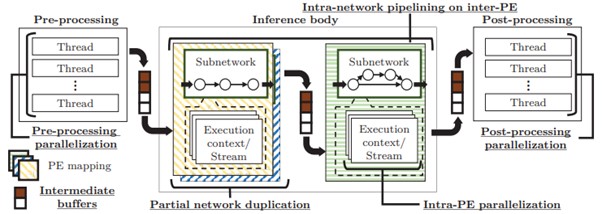

As deep learning inference applications become more common on embedded devices, these devices increasingly include neural processing units (NPUs) in addition to a multi-core CPU and a GPU. To support fast and efficient development of deep learning applications, we provide our software optimization techniques as an SDK for high-performance inference that delivers low latency and high throughput. We propose several optimization parameters for accelerating a deep learning application on heterogeneous processors: multi-threading, pipelining, buffer assignment, and network duplication. Because the design space of allocating layers to diverse processing elements and tuning the other parameters is huge, we devise a parameter optimization methodology that consists of a heuristic for balancing pipeline stages among heterogeneous processors and a fine-tuning process for optimizing the remaining parameters.
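To make the idea of balancing pipeline stages concrete, here is a minimal sketch. It is not the SDK's heuristic, which this page does not specify. It assumes a simplified model in which the network is a chain of layers, each processor runs one contiguous block of layers in a fixed order, and throughput is limited by the slowest stage. The function name `balance_pipeline` and the `latency` table are hypothetical and used only for illustration.

```python
from itertools import accumulate


def balance_pipeline(latency):
    """Split a chain of layers into one contiguous stage per processor.

    latency[p][l] is an assumed (hypothetical) execution time of layer l
    on processor p. Returns (bottleneck, stages), where stages[p] is the
    half-open layer range (start, end) assigned to processor p and
    bottleneck is the time of the slowest stage.
    """
    n_proc = len(latency)
    n_layers = len(latency[0])
    # prefix[p][j] = total time of layers 0..j-1 on processor p
    prefix = [[0.0] + list(accumulate(row)) for row in latency]

    inf = float("inf")
    # best[p][j] = smallest bottleneck when the first p processors
    # cover the first j layers
    best = [[inf] * (n_layers + 1) for _ in range(n_proc + 1)]
    split = [[0] * (n_layers + 1) for _ in range(n_proc + 1)]
    best[0][0] = 0.0

    for p in range(1, n_proc + 1):
        for j in range(n_layers + 1):
            # Processor p-1 runs layers i..j-1 (the range may be empty).
            for i in range(j + 1):
                stage_time = prefix[p - 1][j] - prefix[p - 1][i]
                candidate = max(best[p - 1][i], stage_time)
                if candidate < best[p][j]:
                    best[p][j] = candidate
                    split[p][j] = i

    # Walk the split table backwards to recover each stage's layer range.
    stages = []
    j = n_layers
    for p in range(n_proc, 0, -1):
        i = split[p][j]
        stages.append((i, j))
        j = i
    stages.reverse()
    return best[n_proc][n_layers], stages


# Hypothetical per-layer times: processor 0 is slower than processor 1.
example_latency = [
    [4, 4, 4, 4],  # processor 0
    [2, 2, 2, 2],  # processor 1
]
bottleneck, stages = balance_pipeline(example_latency)
print(bottleneck, stages)  # prints: 6.0 [(0, 1), (1, 4)]
```

In this example the slower processor takes one layer and the faster one takes three, so the slowest stage takes 6 time units instead of 8. A real allocation would also need to account for data transfer between processors, multi-threading, buffer assignment, and network duplication, which this sketch leaves out.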